Function calling and structured outputs in LLMs with LangChain and OpenAI

```python
from textwrap import dedent
from typing import Literal

import requests
from dotenv import load_dotenv
from langchain_core.messages import HumanMessage, SystemMessage
from langchain_core.tools import tool
from langchain_openai import ChatOpenAI
from langsmith import traceable
from pydantic import BaseModel, Field

load_dotenv()
```

Function calling and structured outputs let you go from chatbots that just talk to agents that interact with the world.

Function calling lets LLMs access external tools and services. Structured outputs ensure that the data coming back from your models is ready to integrate into your application.

These are two of the most important techniques for building LLM applications. I can tell you that mastering them will make your applications better and easier to maintain.

In this tutorial, you’ll learn:

- How function calling and structured outputs work and when to use them

- How to implement both techniques using LangChain and OpenAI

- Practical examples you can run and adapt for your own projects

Let’s get started.

Function calling

Function calling refers to the ability to get LLMs to use external tools or functions. It matters because it gives LLMs more capabilities, allows them to talk to external systems, and enables complex task automation. This is one of the key features that unlocked agents.

The usual flow is:

- The developer sets up an LLM with a set of predefined tools

- The user asks a question

- The LLM decides if it needs to use a tool

- If it does, it requests a tool call, and your code runs the tool and passes the output back to the LLM

- The LLM then uses the output to answer the user’s question

Here’s a diagram that illustrates how function calling works:

AI developers are increasingly using function calling to build more complex systems. You can use it to:

- Get information from a CRM, a database, etc.

- Perform calculations (e.g., generate an estimate for a variable, financial calculations)

- Manipulate data (e.g., data cleaning, data transformation)

- Interact with external systems (e.g., booking a flight, sending an email)

Structured outputs

Structured outputs are a group of methods that “ensure that model outputs adhere to a specific structure”1. With proprietary models, this usually means a JSON schema. With open-weight models, a structure can mean anything from a JSON schema to a specific regex pattern. You can use libraries such as Outlines for this.
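To make “JSON schema” concrete, here’s a minimal sketch of what one looks like when you describe the structure with a Pydantic model, as this tutorial does later. The WeatherReport model and its fields are hypothetical, chosen only for illustration; model_json_schema() is the Pydantic v2 method that produces the schema.

```python
from pydantic import BaseModel, Field


class WeatherReport(BaseModel):
    """A hypothetical structure for a weather answer."""

    city: str = Field(description="Name of the city")
    temperature_c: float = Field(description="Current temperature in Celsius")


# Pydantic v2 turns the class definition into a JSON schema dict
schema = WeatherReport.model_json_schema()
print(schema["required"])  # ['city', 'temperature_c']
print(schema["properties"]["temperature_c"]["type"])  # number
```

Field names, types, and descriptions all end up in the schema, which is what a provider can use to constrain or check the model’s output.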

Structured outputs are very useful for creating agentic systems, as they simplify the communication between components. As you can imagine, it’s a lot easier to parse a JSON object than free-form text. Note, however, that as with other things in life, there’s no free lunch. Using this technique might affect performance on your task, so you should have evals in place.
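A small illustration of the parsing point, using only the standard library. The sentence is the kind of answer the weather example later in this tutorial produces; the JSON string and its field names are hypothetical.

```python
import json
import re

# The same information, once as free-form text and once as JSON
free_text = "The current temperature in Tokyo is approximately 25.5°C."
json_text = '{"city": "Tokyo", "temperature_c": 25.5}'

# Free-form text: you need a pattern that breaks as soon as the wording changes
match = re.search(r"(-?\d+(?:\.\d+)?)\s*°C", free_text)
temperature_from_text = float(match.group(1)) if match else None

# JSON: a single parse call, and the field name is part of the contract
temperature_from_json = json.loads(json_text)["temperature_c"]

print(temperature_from_text, temperature_from_json)  # 25.5 25.5
```

If the model had answered “about twenty-five degrees”, the regex would find nothing, while the JSON version keeps working as long as the structure is respected.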

In the next sections, I’ll show you how to use function calling and structured outputs with OpenAI.

Prerequisites

To follow this tutorial you’ll need to:

- Sign up and generate an API key in OpenAI.

- Sign up and generate an API key in LangSmith.

- Create a .env file with the following variables:

- Create a virtual environment in Python and install the requirements:

Once you’ve completed the steps above, you can copy and paste the code from the next sections. You can also download the notebook from here.
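If you want to confirm your setup before running the examples, a minimal sketch like this checks that the keys are available. The variable names are assumptions: OPENAI_API_KEY is the variable langchain-openai reads by default, and LANGSMITH_API_KEY and LANGSMITH_TRACING are the names used in LangSmith’s documentation. Drop the LangSmith ones if you aren’t using it.

```python
import os

# Assumed variable names (see the note above); adjust to match your .env file
REQUIRED_VARS = ("OPENAI_API_KEY", "LANGSMITH_API_KEY", "LANGSMITH_TRACING")


def missing_env_vars(required=REQUIRED_VARS, env=None):
    """Return the names of required variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name)]


# Run this after load_dotenv() so values from your .env file are included
missing = missing_env_vars()
if missing:
    print("Missing environment variables:", ", ".join(missing))
```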

Examples

As usual, you’ll start by importing the necessary libraries.

You’ll use LangChain to interact with the OpenAI API and Pydantic for data validation.

Now that you’ve imported the libraries, you’ll work on three examples:

- Providing a model with a single tool

- Providing a model with multiple tools

- Generating a structured output from a model

Function calling with a single tool

Start by defining the model and the tool:

```python
model = ChatOpenAI(model="gpt-4.1-mini")


@tool
def find_weather(latitude: float, longitude: float):
    """Get the weather of a given latitude and longitude"""
    response = requests.get(
        "https://api.open-meteo.com/v1/forecast"
        f"?latitude={latitude}&longitude={longitude}"
        "&current=temperature_2m,wind_speed_10m"
        "&hourly=temperature_2m,relative_humidity_2m,wind_speed_10m",
        timeout=10,
    )
    response.raise_for_status()
    data = response.json()
    # Only the current temperature (°C) is returned to the model
    return data["current"]["temperature_2m"]


# Maps the tool names the model may request to the functions that implement them
tools_mapping = {
    "find_weather": find_weather,
}

model_with_tools = model.bind_tools([find_weather])
```

This code sets up a gpt-4.1-mini model with a single tool. To define a tool, you write a function and add the @tool decorator. The function needs a docstring, because it’s used to describe the tool to the model. In this case, the tool takes latitude and longitude values and returns the current temperature for that location by calling the Open-Meteo API.

Next, you need to tell your code how to find and use your tools. This is the purpose of tools_mapping, and it’s a common point of confusion: the LLM doesn’t run the tools on its own. It only decides whether a tool should be used. After the model makes its decision, your own code must make the actual tool call.

In this situation, since you only have one tool, a mapping isn’t really necessary. But if you were using multiple tools, which is often the case, you would need to create a “map” that links each tool’s name to its corresponding function. This lets you call the right tool when the model decides to use it.
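Conceptually, the mapping is just a dispatch table. Here’s a framework-free sketch of what your code does with one tool call. The dict shape (name, args, id) mirrors the entries in LangChain’s ai_message.tool_calls; the stand-in find_weather, the run_tool_call helper, and the OpenAI-style result dict are hypothetical simplifications. In this tutorial’s code, selected_tool.invoke(tool_call) handles this step and returns a LangChain ToolMessage.

```python
from typing import Any, Callable


def find_weather(latitude: float, longitude: float) -> float:
    """Hypothetical stand-in for the real tool: returns a fixed temperature."""
    return 25.5


# Tool name (as the model will request it) -> function that implements it
TOOLS_MAPPING: dict[str, Callable[..., Any]] = {"find_weather": find_weather}


def run_tool_call(tool_call: dict) -> dict:
    """Execute one requested tool call and package the result for the model."""
    func = TOOLS_MAPPING.get(tool_call["name"])
    if func is None:
        # Report the problem back instead of crashing, so the model can recover
        content = f"Error: unknown tool {tool_call['name']!r}"
    else:
        content = str(func(**tool_call["args"]))
    return {"role": "tool", "tool_call_id": tool_call["id"], "content": content}


# A hypothetical tool call, shaped like an entry in ai_message.tool_calls
call = {"name": "find_weather", "args": {"latitude": 35.68, "longitude": 139.69}, "id": "call_1"}
print(run_tool_call(call))
```

Returning an error message for an unknown tool, rather than raising, is a design choice: it lets the model see what went wrong and try again.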

Finally, you need to bind the tool to the model. Binding makes the model aware of the tool: its name, description, and parameters are sent along with each request, so the model can decide to use it.
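To see what “making the model aware of the tool” amounts to, here’s a simplified, hypothetical re-implementation of the conversion. It reads a function’s name, docstring, and type hints and builds a tool definition in the shape OpenAI’s Chat Completions API expects. function_to_tool_schema is not a LangChain function; LangChain’s real conversion goes through Pydantic and handles far more cases (optional arguments, nested types, argument descriptions).

```python
import inspect

# Simplified mapping from Python annotations to JSON schema types
PY_TO_JSON_TYPE = {str: "string", int: "integer", float: "number", bool: "boolean"}


def function_to_tool_schema(func) -> dict:
    """Build an OpenAI-style tool definition from a signature and docstring."""
    sig = inspect.signature(func)
    properties = {
        name: {"type": PY_TO_JSON_TYPE[param.annotation]}
        for name, param in sig.parameters.items()
    }
    # Parameters without a default value are required
    required = [
        name
        for name, param in sig.parameters.items()
        if param.default is inspect.Parameter.empty
    ]
    return {
        "type": "function",
        "function": {
            "name": func.__name__,
            "description": inspect.getdoc(func),
            "parameters": {"type": "object", "properties": properties, "required": required},
        },
    }


def find_weather(latitude: float, longitude: float):
    """Get the weather of a given latitude and longitude"""
    ...  # body omitted: only the signature and docstring matter here


print(function_to_tool_schema(find_weather))
```

This is why the docstring matters: it becomes the tool description the model reads when deciding whether to call the tool.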

Then, let’s define a function that lets you call the model with the tool.

@traceable

def get_response(question: str):

messages = [

SystemMessage(

"You're a helpful assistant. Use the tools provided when relevant."

),

HumanMessage(question),

]

ai_message = model_with_tools.invoke(messages)

messages.append(ai_message)

for tool_call in ai_message.tool_calls:

selected_tool = tools_mapping[tool_call["name"]]

tool_msg = selected_tool.invoke(tool_call)

messages.append(tool_msg)

ai_message = model_with_tools.invoke(messages)

messages.append(ai_message)

return ai_message.content

response = get_response("What's the weather in Tokyo?")

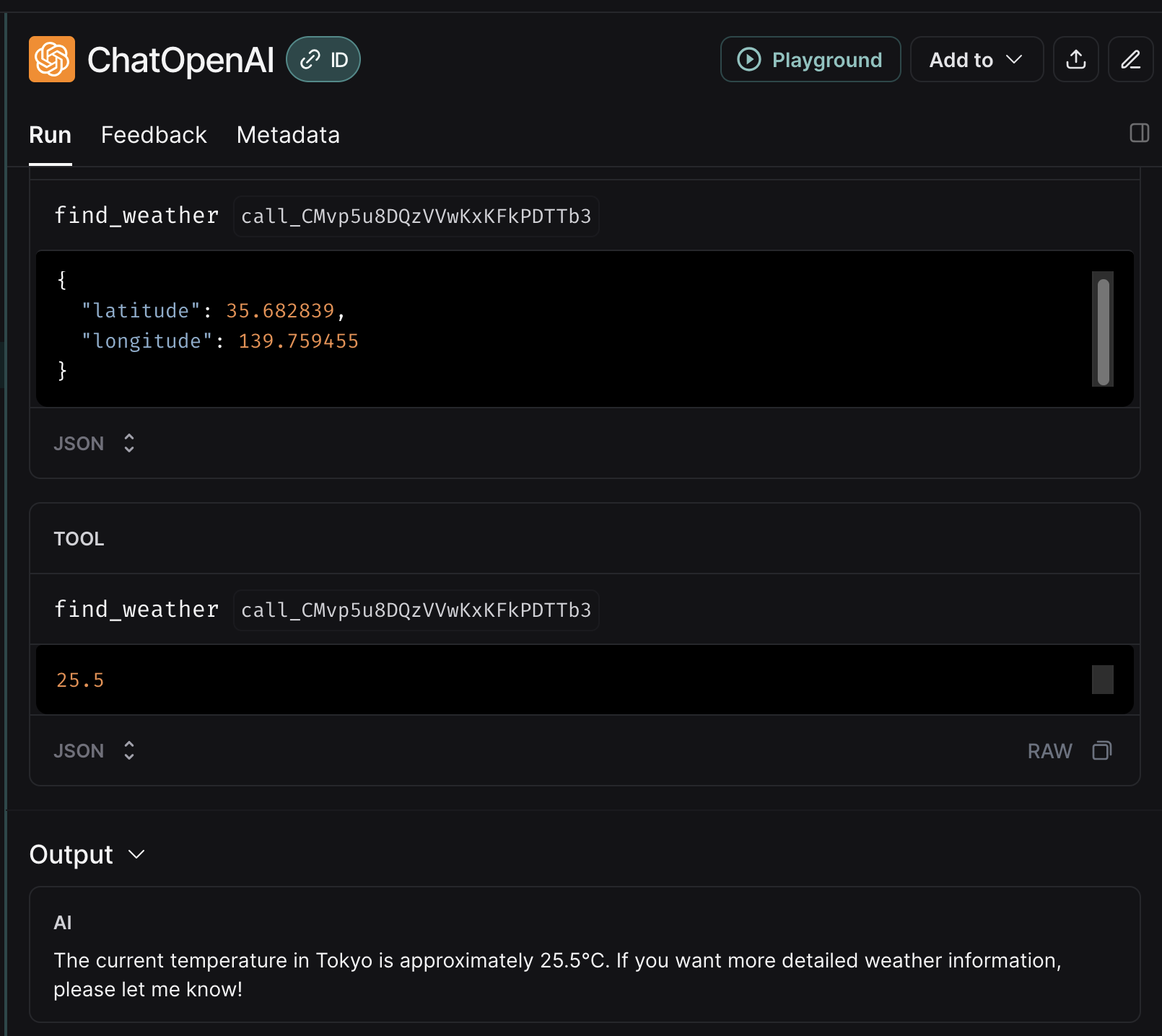

print(response)The current temperature in Tokyo is approximately 25.5°C. If you want more detailed weather information, please let me know!This function takes a city name and returns the weather for that city. It uses the find_weather tool to get the weather data.

It works as follows:

- The @traceable decorator from LangSmith lets you see the trace of the function in the LangSmith UI. If you prefer not to use LangSmith, you can remove it.

- The first part of the function sets up the prompts and calls the model.

- The for loop is where the magic happens. It checks whether the model’s response contains any tool calls. If it does, it calls (invokes) each requested tool and adds the result to the messages.

- Finally, the model is called again to get the final response.

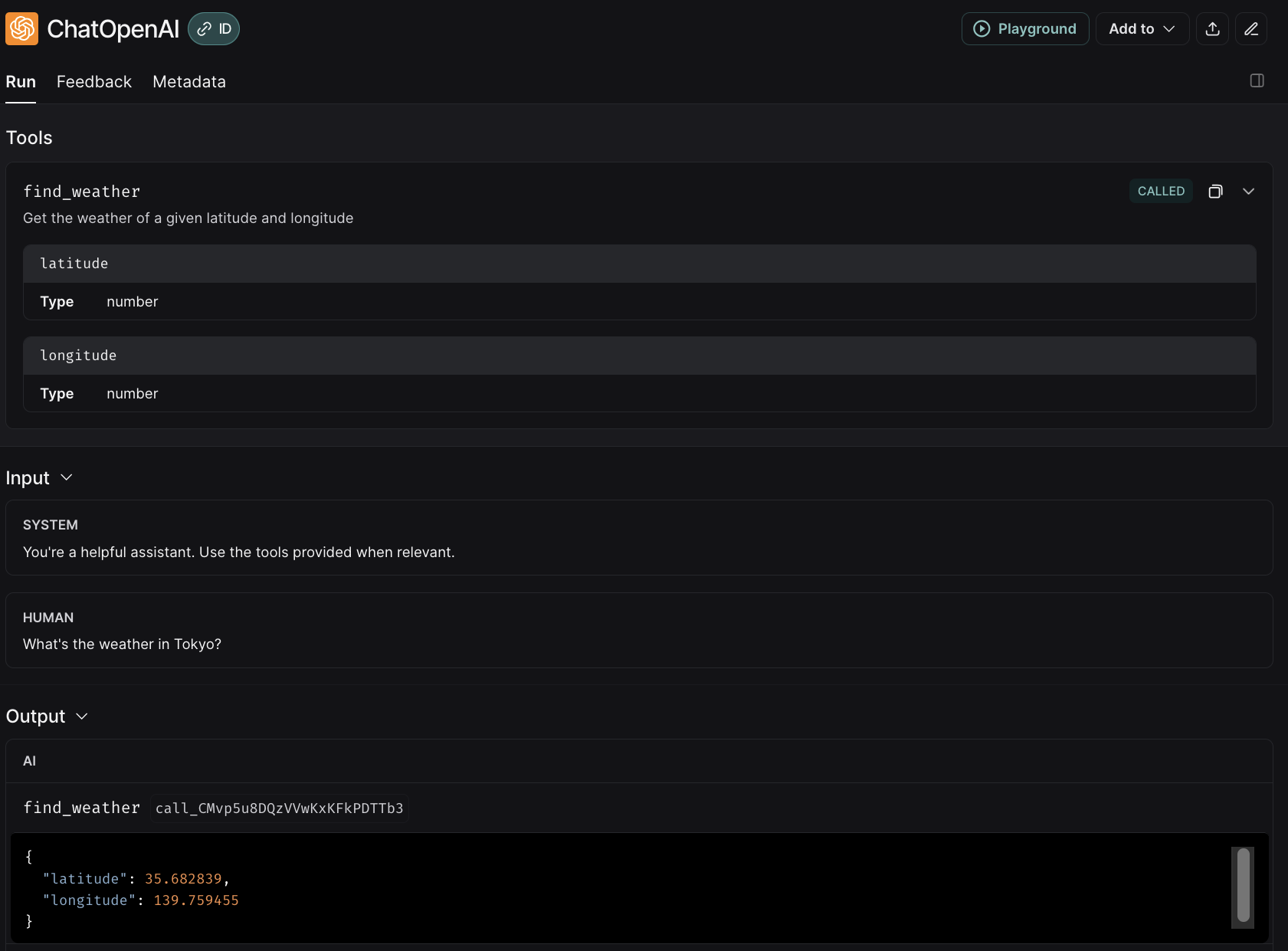

When you run this code, you’ll get a text response with the weather for the city you asked for. If you check the trace, you can see how the whole process works:

There are three steps in the process:

- Initial model call with the question from the user.

- Tool call to get the weather data.

- Final model call to get the response.

If you dig deeper into the first model call, you’ll see how the tool is provided to the model and how the model decides to use it:

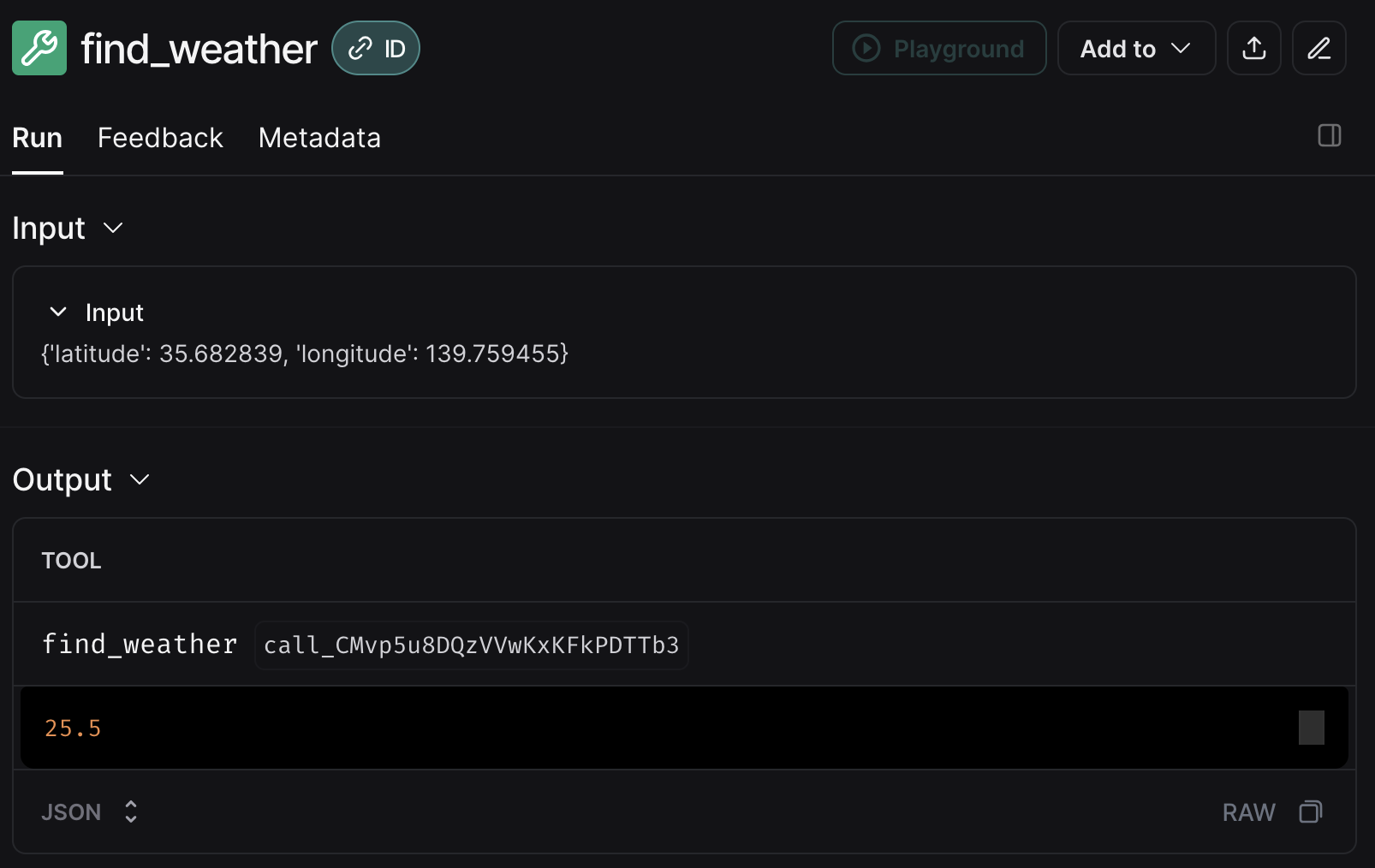

The tool is provided to the model by describing it with the function’s docstring. The parameters and their types are also included. The model then responds with the name of the tool it wants to use and the arguments it wants to pass to it. Your code then calls this tool.

The result of the tool call is then passed back to the model, which uses it to generate the final response.
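Under the hood, LangChain converts its message objects into the provider’s format. Here’s a sketch of roughly what the conversation looks like in OpenAI’s Chat Completions format at the time of the second model call. The tool call ID and the coordinates are hypothetical, and the exact payload LangChain sends may include additional fields.

```python
import json

# Approximate messages sent on the second model call (OpenAI Chat Completions format)
messages = [
    {"role": "system", "content": "You're a helpful assistant. Use the tools provided when relevant."},
    {"role": "user", "content": "What's the weather in Tokyo?"},
    # First model reply: no text, only a request to call a tool
    {
        "role": "assistant",
        "content": None,
        "tool_calls": [
            {
                "id": "call_abc123",  # hypothetical ID
                "type": "function",
                "function": {
                    "name": "find_weather",
                    # Arguments arrive as a JSON-encoded string, not a dict
                    "arguments": json.dumps({"latitude": 35.68, "longitude": 139.69}),
                },
            }
        ],
    },
    # Your code's tool result, linked to the request by tool_call_id
    {"role": "tool", "tool_call_id": "call_abc123", "content": "25.5"},
]

request = messages[2]["tool_calls"][0]
args = json.loads(request["function"]["arguments"])
print(request["function"]["name"], args)
```

The tool_call_id is what lets the model match each result to the request that produced it, which matters once several tools are called in one turn.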

That’s it. This is how you provide a model with tools. In the next section, you’ll see how to use multiple tools.

Function calling with multiple tools

Similar to the previous example, you start by defining the tools (using the @tool decorator) and binding them to the model.

In addition to get_weather, you’ll also define a tool to check if a response follows the company guidelines. In this case, the company guidelines are that responses should be written in the style of a haiku.2

```python
model = ChatOpenAI(model="gpt-4.1-mini")


@tool
def get_weather(latitude: float, longitude: float):
    """Get the weather of a given latitude and longitude"""
    response = requests.get(
        "https://api.open-meteo.com/v1/forecast"
        f"?latitude={latitude}&longitude={longitude}"
        "&current=temperature_2m,wind_speed_10m"
        "&hourly=temperature_2m,relative_humidity_2m,wind_speed_10m",
        timeout=10,
    )
    response.raise_for_status()
    data = response.json()
    return data["current"]["temperature_2m"]


@tool
def check_guidelines(drafted_response: str) -> str:
    """Check if a given response follows the company guidelines"""
    # A separate model call acts as the reviewer of the drafted response
    reviewer_model = ChatOpenAI(model="gpt-4.1-mini")
    response = reviewer_model.invoke(
        [
            SystemMessage(
                "You're a helpful assistant. Your task is to check if a given response follows the company guidelines. The company guidelines are that responses should be written in the style of a haiku. You should reply with 'OK' or 'REQUIRES FIXING' and a short explanation."
            ),
            HumanMessage(f"Current response: {drafted_response}"),
        ]
    )
    return response.content


tools_mapping = {
    "get_weather": get_weather,
    "check_guidelines": check_guidelines,
}

model_with_tools = model.bind_tools([get_weather, check_guidelines])
```

This code defines the tools and binds them to the model. Just like before, you also need to define a mapping of the tools, so that you can call the right tool when the model decides to use it.

```python
@traceable
def get_response(question: str):
    messages = [
        SystemMessage(
            "You're a helpful assistant. Use the tools provided when relevant. Then draft a response and check if it follows the company guidelines. Only respond to the user after you've validated and modified the response if needed."
        ),
        HumanMessage(question),
    ]
    ai_message = model_with_tools.invoke(messages)
    messages.append(ai_message)

    # Keep running tools until the model replies without requesting any
    while ai_message.tool_calls:
        for tool_call in ai_message.tool_calls:
            selected_tool = tools_mapping[tool_call["name"]]
            tool_msg = selected_tool.invoke(tool_call)
            messages.append(tool_msg)
        ai_message = model_with_tools.invoke(messages)
        messages.append(ai_message)

    return ai_message.content


response = get_response("What is the temperature in Madrid?")
print(response)
```

```
Sunny Madrid basks,
Thirty-six degrees embrace,
Summer's warm caress.
```

This code is pretty much the same as the previous example, but with two tools. There’s also one small difference.

Previously, we checked for tool calls once. Now, we’ll use a while loop that keeps checking. So, instead of the model having to provide the final answer after one turn, it can now ask for tools multiple times in a row until it has all the information it needs.

This is the core idea behind how agents work. So, congratulations, you’ve just built a simple agent! If you check the process in LangSmith, you’ll see how these turns play out.
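The while loop is the whole trick. Here’s a framework-free sketch of the same loop with a scripted stand-in for the model, so you can run it offline. Two assumptions to flag: messages are plain OpenAI-style dicts rather than LangChain message objects, and the max_turns cap is an addition that isn’t in the code above. It’s a common safeguard, because a loop driven entirely by the model could otherwise run indefinitely.

```python
from typing import Callable


def run_agent(call_model: Callable[[list], dict], tools: dict, messages: list, max_turns: int = 5) -> str:
    """Keep running requested tools until the model answers without tool calls."""
    ai_message = call_model(messages)
    messages.append(ai_message)
    turns = 0
    while ai_message.get("tool_calls"):
        turns += 1
        if turns > max_turns:
            # Added safeguard: stop a model that never stops asking for tools
            raise RuntimeError(f"No final answer after {max_turns} tool rounds")
        for tool_call in ai_message["tool_calls"]:
            result = tools[tool_call["name"]](**tool_call["args"])
            messages.append({"role": "tool", "tool_call_id": tool_call["id"], "content": str(result)})
        ai_message = call_model(messages)
        messages.append(ai_message)
    return ai_message["content"]


# Hypothetical scripted "model": fetch the weather, check the draft, then answer
scripted_replies = iter([
    {"role": "assistant", "content": "", "tool_calls": [
        {"name": "get_weather", "args": {"latitude": 40.42, "longitude": -3.70}, "id": "1"}]},
    {"role": "assistant", "content": "", "tool_calls": [
        {"name": "check_guidelines", "args": {"drafted_response": "It is 36°C in Madrid."}, "id": "2"}]},
    {"role": "assistant", "content": "Sunny Madrid basks..."},
])
# Hypothetical stand-ins for the two tools
stub_tools = {
    "get_weather": lambda latitude, longitude: 36.0,
    "check_guidelines": lambda drafted_response: "REQUIRES FIXING: not a haiku",
}

conversation = [{"role": "user", "content": "What is the temperature in Madrid?"}]
print(run_agent(lambda msgs: next(scripted_replies), stub_tools, conversation))
```

Each pass through the loop is one “turn”: the model asks for tools, your code runs them, and the results go back in before the next model call.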

Next, let’s see how to use structured outputs.

Structured outputs

Structured outputs are a set of methods used to get model outputs that follow a specific structure. This is useful when you want to get a specific type of output, such as a JSON object.

It’s easy to set up with proprietary models. With LangChain, you can define a dict or a Pydantic model to describe the output. I recommend using Pydantic models.

For example, let’s define a Pydantic model that will help us classify documents into categories:

This model defines the structured output we’ll get from the model. It has two fields: category and summary.
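A minimal sketch of such a model, using the Literal and Field imports from the start of the tutorial. The set of category labels is hypothetical (only pets appears in the sample output below). Since the model’s JSON schema is what gets sent to the provider, the field descriptions effectively double as instructions to the LLM.

```python
from typing import Literal

from pydantic import BaseModel, Field, ValidationError


class DocumentInfo(BaseModel):
    """Category and summary of a document."""

    # Hypothetical label set; "pets" matches the sample output in this section
    category: Literal["pets", "technology", "finance", "other"] = Field(
        description="The category that best describes the document"
    )
    summary: str = Field(description="A one-sentence summary of the document")


info = DocumentInfo(category="pets", summary="A document about a cat.")
print(info)  # category='pets' summary='A document about a cat.'

# Literal restricts category to the listed values, so anything else is rejected
try:
    DocumentInfo(category="cooking", summary="A recipe.")
except ValidationError as exc:
    print("Rejected:", exc.error_count(), "error(s)")
```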

Then, you can use the with_structured_output method to create a model that will return the structured output:

```python
model = ChatOpenAI(model="gpt-4.1-mini", temperature=0)


def get_document_info(document: str) -> DocumentInfo:
    # The wrapped model returns a DocumentInfo instance instead of a chat message
    model_with_structure = model.with_structured_output(DocumentInfo)
    response = model_with_structure.invoke(document)
    return response


document_text = dedent(
    """
    This is a document about cats. Very important document. It explains how cats will take over the world in 2030.
    """
)

# You can also try get_document_info(document_text)
document_info = get_document_info("I'm a document about a cat")
print(document_info)
```

```
category='pets' summary='A document about a cat.'
```

After running this code, you’ll get a structured output with the category and summary of the document that you can then use in further steps of your workflow.

Depending on the provider, you’ll have different options to get structured outputs. OpenAI offers three different methods:

- function_calling: This uses the tool-calling mechanism to get the structured output.

- json_mode: This method ensures you get a valid JSON object, but it’s not clear how it works under the hood.

- json_schema: This is the default method in LangChain. It ensures that the output is a valid JSON object and that it matches the schema you provide, using constrained decoding.
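To make the differences concrete, here’s a sketch of roughly which raw OpenAI Chat Completions request fields each method relies on. It’s an approximation based on OpenAI’s API, not the exact payload LangChain sends; the schema and the request_options helper are hypothetical.

```python
import json

# Hypothetical JSON schema for the DocumentInfo example (labels are illustrative)
DOCUMENT_INFO_SCHEMA = {
    "type": "object",
    "properties": {
        "category": {"type": "string", "enum": ["pets", "technology", "finance", "other"]},
        "summary": {"type": "string"},
    },
    "required": ["category", "summary"],
    "additionalProperties": False,  # OpenAI's strict mode expects this on objects
}


def request_options(method: str) -> dict:
    """Roughly the Chat Completions request fields each method relies on."""
    if method == "function_calling":
        # The schema is offered as a tool, and tool_choice forces the model to call it
        return {
            "tools": [{"type": "function", "function": {"name": "DocumentInfo", "parameters": DOCUMENT_INFO_SCHEMA}}],
            "tool_choice": {"type": "function", "function": {"name": "DocumentInfo"}},
        }
    if method == "json_mode":
        # Only asks for syntactically valid JSON; the schema itself isn't sent
        return {"response_format": {"type": "json_object"}}
    if method == "json_schema":
        # The schema is sent directly and enforced in strict mode
        return {
            "response_format": {
                "type": "json_schema",
                "json_schema": {"name": "DocumentInfo", "schema": DOCUMENT_INFO_SCHEMA, "strict": True},
            }
        }
    raise ValueError(f"Unknown method: {method!r}")


for method in ("function_calling", "json_mode", "json_schema"):
    print(method, json.dumps(request_options(method))[:80])
```

With json_mode, the model never sees the schema through the API, so you have to describe the expected fields in the prompt yourself.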

Gemini and Anthropic provide their own methods to get structured outputs.

One thing to keep in mind is that structured outputs can impact performance. I’ve written multiple posts about this topic, so I won’t go into detail here.

Conclusion

Function calling and structured outputs are powerful tools that help build more capable AI systems. They’re also the foundation of agents.

Function calling is a way to provide LLMs with tools to use. It lets you go from building a chatbot that can only talk to building an AI assistant that can actually interact with the world. It opens up a world of possibilities, from connecting to databases to calling APIs and automating workflows.

Structured outputs are just as important. They’re critical to integrating LLMs into existing systems. Instead of struggling with parsing free-form text, you get clean, predictable data structures that you can use in your code.

The examples in this tutorial should give you a sense of how to use these methods. But as usual, the real learning happens when you start applying these concepts to your own problems. Pick a task you’re working on, see if any of these methods can help you, and give it a try.

If you have any questions or comments, let me know in the comments below.

Footnotes

1. “We Need Structured Output”: Towards User-centered Constraints on LLM Output. MX Liu et al. 2024

2. Please don’t judge me. Companies do all sorts of weird things these days.

Citation

```bibtex
@online{castillo2025,
  author = {Castillo, Dylan},
  title = {Function Calling and Structured Outputs in {LLMs} with {LangChain} and {OpenAI}},
  date = {2025-07-01},
  url = {https://dylancastillo.co/posts/function-calling-structured-outputs.html},
  langid = {en}
}
```